In the realm of conversational artificial intelligence (AI), maximizing token usage and retaining contextual information is essential for achieving an optimal user experience. By effectively managing tokens within the given context, developers can extend the conversation length and enhance the overall conversational experience. This article explores best practices for token optimization and a method that enables users to maintain long conversations while optimizing token usage and preserving crucial contextual information.

Before we dive into the optimization part, let’s try to understand what tokens are in OpenAI APIs, how OpenAI tokens work, and what OpenAI tokens actually mean.

Tokens can be likened to fragments of words, serving as the building blocks for language processing. Before the API processes the prompts, the input is divided into these tokens. However, tokens do not necessarily align with our conventional understanding of word boundaries, as they can encompass trailing spaces and even partial words.

To shed light on token lengths, OpenAI offers some practical rules of thumb for better understanding:

| Tokens to Words | Words to Tokens |

| 1 token ≈ 4 chars in English1 token ≈ ¾ words100 tokens ≈ 75 words | 1-2 sentences ≈ 30 tokens1 paragraph ≈ 100 tokens1,500 words ≈ 2048 tokens |

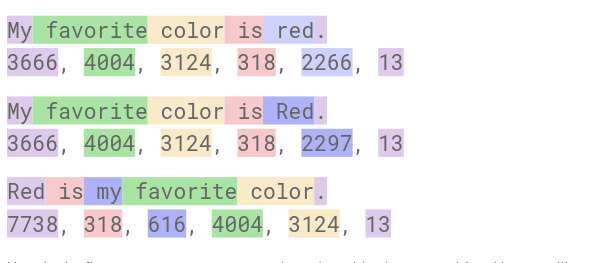

The tokens generated for seemingly identical words can vary based on their structural arrangement within the text. Let’s understand with an example.

Here, in the first sentence, you can see “red” starting with a lowercase ‘r’ and having a trailing space. The second sentence begins with an uppercase ‘R’ and also has a trailing space. In the third sentence, “red” starts with an uppercase ‘R’ but lacks a trailing space.

To us, all the sentences have the same meaning, but to a GPT model, each word and its placement and letters have a different meaning with different tokens. The more probable use of a token is, the lower the number is assigned to a token. In all three sentences, the token assigned to the period is consistently 13. This is due to the similar contextual usage of the period observed across the corpus data. But in the case of Red, we have different tokens each time as tokens with and without a trailing space are different in terms of GPT training.

Meaning ‘red’ != ‘ red‘ (red with space before)

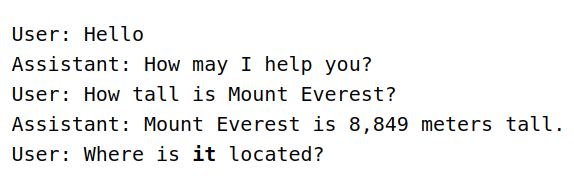

You may think that whatever message you sent previously will be remembered by the OpenAI APIs, but because the ChatGPT generates the next answer based on previous conversations, you have to share the entire conversation via API back and forth.

For example, if you are using an API to chat with a GPT model and have the following conversation.

Here, in order for the GPT model to understand that by the word “it” you are referring to “Mount Everest”, you will have to send the entire conversation back to the OpenAI. This means to have a contextual conversation, you will have to carry the entire conversation i.e. all 5 messages with an API Payload.

Also read: Mastering the Fundamentals of Deep Q-Learning

Here’s a sample prompt. Here we explain the GPT model that is supposed to explain Object Oriented Programming concepts to the User.

Mode: Chat Model: GPT-3.5-Turbo Temp: 1 Max Length: 256 Top P: 1

As you can see from the above sample prompt, a student is learning object-oriented programming concepts from AI. Now we have instructed an AI that it will be asked to summarize the conversation to remember for next time.

See the full conversation here.

For conversation for that user, the initial conversation until the user learned about Inheritance and Abstraction, usage was as follows.

Now if the user continued with the same conversation, meaning if we continued sending the next query without omitting previous messages and adding a summary, it would have used tokens as per the below image.

See the full conversation here.

But by providing the last conversation summary in the system prompt, you can see that used tokens went down significantly without affecting the user’s experience.

See the conversation here.

Sample Prompt You are an AubGPT. You guide … … … Last conversation summary: [Level of student: Beginner; Topics discussed: Inheritance and Abstraction; Next topic to be discussed: Encapsulation]

The point to be noted here is that the summary was generated by ChatGPT, and we may need to build a mechanism to send a [Summarize] command and append it to the initial prompt.

Also read: Unveiling the Basics of Natural Language Processing

Multiple advantages of efficient token usage in AI conversations are transforming the way we interact with artificial intelligence at its core. Token optimization can help developers by unlocking many benefits, thereby enhancing the entire experience for users and businesses alike. Here are some of the advantages:

Cost Savings: Optimizing tokens transcends directly into cost savings. It is essential for the developers to manage the tokens efficiently as it will help them in several ways. Most importantly they can make the most of their API usage by significantly reducing the number of tokens consumed per conversation. This process increases the value derived from each API call and reduces the associated costs remarkably.

Faster Response Rate: Token optimization leads to decreased response times, thus increasing the speed of the entire process and also enables dynamic and interactive conversations with AI models. The efficient use of tokens decreases the processing time requisite for each API call; hence, the response rate becomes fast automatically. The user is able to experience a seamless and engaging conversation with AI as the to-and-fro movement of instruction and response is exceptionally quick.

Scalability: A well-organized token usage is essential for developers. It plays a crucial role in raising scalability as well as in accentuating the accommodation of a larger user base. Developers can improve the quality of their work and speed remarkably with token optimization. They are also able to utilize limited resources quite well with that as the optimization allows AI systems to handle multiple conversations simultaneously. The platforms and applications require this kind of scalability; especially those that have to continuously manage AI-based high traffic and continuous interactions.

Enhanced User Experiences: Token optimization has numerous advantages. Alleviating the quality of user experience is one of them. It acts as an enabler for longer, rich-quality contextual conversations. Effective token management provides an easy extension to the conversation whilst maintaining the originality of the context. This builds up a strong foundation for meaningful, deep, and engaging conversations with AI systems. It leads to better understanding and continuity.

Improved Conversational Flow: Token optimization also improves the flow of conversations. It leads to efficacious utilization of tokens and thus allows developers to initiate organic humanized cohesive and lucid interactions.

Generating effective communication synopses is a vital step of token optimization in AI conversations. Many strategies and innovative techniques can be implemented to generate precise and useful summaries that showcase the crux of the communique. Let’s discover a few of these techniques that enhance the summarization process:

Predefined Summary Formats: There’s one technique for conducting summarization of conversation which involves usage of predefined summary formats. The designs of these formats are curated specifically to capture crucial information bits like the understanding level of the respective user, topics chosen for discussion, and themes of upcoming topics. With a structured process flow, developers are able to cite and present essential information pieces in a precise and uniform manner.

AI-Generated Summaries: AI-generated summaries automate the summarization process in the most efficient and smooth way. They utilize natural language processing techniques as AI systems are able to analyze the interaction history. Basis the same they are able to deduce the most appropriate and critical parts. Thus, the manual efforts required for creating summaries reduces starkly and the system also becomes more adaptable to diverse variations.

Combination of Automated and Human Review: The most advantageous method for summarization of communication involves a balanced approach of automation and human review. The automation can help in creating initial synopsis, which thereafter has to undergo expert human review and subsequently suggested refining. The review is mandatory as it ensures precision and consistency as the prospective misalignments or errors are removed or corrected. This kind of approach is highly preferable as it involves the strengths of AI algorithms as well as human thought processes and decisiveness. Thus, the end result is quality driven and highly reliable.

Also read: Exploring the Mechanics of OpenAI Gym: A Comprehensive Overview

Careful deliberation and adherence to best practices are important pillars for creating and implementing effective token optimization for communication summarization. The below recommendations will help developers in ensuring the accuracy of the results:

Selecting Appropriate Summary Lengths: When working on generating conversation summaries, a balance needs to be created in retaining concise yet contextual information. While keeping an eye on the length of summaries, it is important to seek that no important information is lost in the process. Carefully evaluate the summaries for length and core both as per respective use case. Even though shorter summaries save tokens, there’s a looming risk of losing out on valuable insights and detailing. On the other hand, longer summaries utilize more tokens and also have an impact on responsiveness.

Handling Complex Conversational Flows: Some conversations include complexities in terms of branching and flows. In such cases, it is crucial to ensure that the summaries must contain relevant context. The complex flows may include conditional statements, loops, or multi-threaded conversations. Here the role of the developers becomes even more critical as they have to think of such token optimization designs that can handle complex flows whilst maintaining contextual intricacies. The synopsis should capture necessary information from all the branches and enable meaningful accurate replies.

Ensuring Accuracy and Contextual Understanding: For an AI model to be successful, it is essential to maintain the accuracy and contextual understanding of the AI model. Regular evaluation and improvisation of token optimization strategies ensure maintenance of the core context of the communication in the summaries and subsequently aligned quick and accurate responses. With systematic testing and in-depth validation, the AI model can function effectively as well as lead to relevant replies.

Continuous Monitoring and Improvement: Token optimization is a continuous process that requires constant monitoring and improvisation. One needs to conduct a performance analysis recurrently and also of the strategies going behind token optimization. The major metrics to watch out for can be response efficiency, user satisfaction, and effective token usage. It is essential to implement all the feedback received from users and regularly improvise token optimization methodologies to alleviate the overall communication experience.

Thorough Documentation and Communication: It is pertinent to maintain a record of your token optimization strategies and share them effectively within your core team of development. The process should contain clear instructions and guidelines as well as essential precautions to be considered with respect to token optimization. One should share acumen and best practices to enable coherence all throughout the development lifecycle. A methodology incorporating clear documentation and effective communication help developers develop an understanding of the rationale behind the entire process, various decisions, and execution.

Advantages and challenges go hand in hand. The same applies to the optimization of token and communication summarization. So, to apply the techniques discussed above, there are a few contemplations to be considered:

Context Preservation: The primary challenge that every developer faces is the preservation of the original context within all the interactions. The tokens have a certain limit to their capacity for retaining and representing the information. Hence, the risk of losing the important core of the conversations keeps on looping while generating a synopsis. So, it becomes all the more essential to make sure that the summary contains all the crucial bits so as to manage the ensuing queries with high accuracy.

Summary Length Limitations: The duration of the communication precis is also one of the factors that developers have to weigh in. Even though summaries have the intention to abbreviate the interaction content, there’s a limitation to what an AI model can process as meaningful synopsis within the limitation of length. Longer summaries lose the grip of tokens for response generation and may lead to incomplete or inaccurate reverts.

Trade-Offs and Accuracy: Optimization process of tokens involve a trade-off between usage and accurate response. The reduction in token volume can provide an advantage in terms of cost-benefit and quicker turnaround time. At the same time, an excessive reduction may lead to lower quality as the AI systems are unable to comprehend the information well. Hence, it is essential to develop a balance so as to reach a token optimization level that also provides the desired accuracy.

Training and Adaptation: The execution process of optimization also requires specialized training that can help with AI adaptation. As the usage patterns of tokens vary, the AI models need to update to handle conversation summaries. Certain refining is necessary to make sure that the AI system can adapt to the new variations and still provide the required performance level.

User Experience Considerations: Out of all the challenges that may present themselves, the most critical is maintaining the quality of user experience. Token optimization should not cause any negative impact on the way users engage with the summaries. Their experience should remain seamless and lucid. Any abrupt variation, change, or confusion should be avoided and no important piece of interaction should be lost. The responsibility of developers increases as they have to ensure a smooth flow of communication and well-maintained accuracy all throughout the engaged duration.

With AI becoming the norm in each platform it would be necessary to take steps in optimizing the way we use the prompts with creative prompt engineering. With just the above example in the ‘Optimizing Tokens with Summarization’ section, you can see that 890 tokens were saved for the contextual conversation! Imagine this optimization applied to each of their upcoming conversations and this multiplied to the entire user base of, let’s say, just a 1000.

In today’s tech world, artificial intelligence has become integral to numerous industries, ranging from healthcare and finance to marketing and customer service. As businesses increasingly rely on AI-powered solutions, it becomes imperative to emphasize the importance of prompt optimization and prompt engineering in AI development. These practices hold the key to ensuring that AI systems produce the desired outputs, enabling businesses to leverage the full potential of AI technology.

At Aubergine Solutions, we specialize in crafting top-tier software solutions that harness the power of artificial intelligence.

Whether it’s fine-tuning your chatbot capabilities, optimizing your virtual assistant’s performance, or refining customer support systems, our skilled team can offer guidance and advice to achieve efficient token usage while enhancing overall conversational flow.

If you are ready to elevate your conversational AI experience, then contact us for assistance.